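`docker logs` and `kubectl logs` show a Docker container's standard output and standard error, which makes it much easier to track down problems. Both commands can show this output because, with the default configuration, stdout and stderr are stored in a log file that belongs to each individual container.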

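Also, once log volume grows, `docker logs` is not a good way to browse historical data. At that point we need to consider shipping the logs to a centralized logging system.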

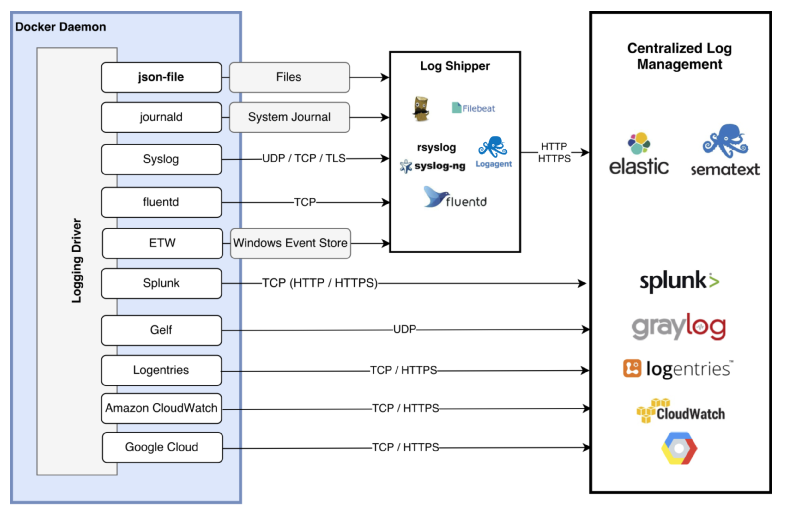

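Docker supports the following logging drivers out of the box. Some write directly to files, others send logs to cloud services. A brief introduction follows.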

credit: https://jaxenter.com/docker-logging-gotchas-137049.html

Official documentation: https://docs.docker.com/config/containers/logging/configure/

Default driver: json-file

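The default logging driver is json-file, which you can confirm with `docker info`. To change the logging driver globally, edit the daemon configuration file /etc/docker/daemon.json. The change takes effect after the Docker daemon is restarted, and only for containers created afterwards; existing containers keep their original logging configuration.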
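As a rough illustration of how the global setting resolves, the Python sketch below reads the text of `daemon.json` and falls back to `json-file` when no `log-driver` key is set. The keys `log-driver` and `log-opts` are the ones Docker documents for this file; the function name `effective_log_driver` and the sample `max-size`/`max-file` values are illustrative assumptions, and this is not Docker's own parsing code. `docker info` remains the authoritative way to see the active driver.

```python
import json

# Driver Docker uses when daemon.json does not set "log-driver".
DEFAULT_LOG_DRIVER = "json-file"


def effective_log_driver(daemon_json_text):
    """Return (driver, options) from the text of /etc/docker/daemon.json.

    Hypothetical helper: empty text (no file) or a missing "log-driver"
    key falls back to json-file with no options.
    """
    config = json.loads(daemon_json_text) if daemon_json_text.strip() else {}
    driver = config.get("log-driver", DEFAULT_LOG_DRIVER)
    options = config.get("log-opts", {})  # option values are strings in daemon.json
    return driver, options


# Illustrative config: json-file with log rotation options.
example = '{"log-driver": "json-file", "log-opts": {"max-size": "10m", "max-file": "3"}}'
print(effective_log_driver(example))
# ('json-file', {'max-size': '10m', 'max-file': '3'})
```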

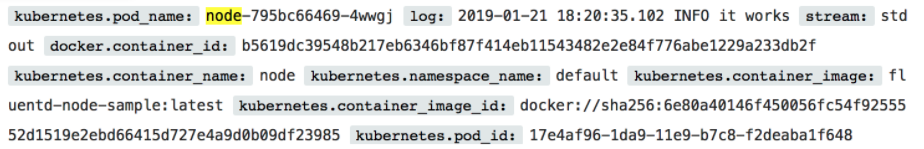

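Each log entry written to the file looks like this: `{"log":"Log line is here\n","stream":"stdout","time":"2019-01-01T11:11:11.111111111Z"}`. Every line is one JSON object: the `log` field holds one line of the container's original output, `stream` records whether it came from stdout or stderr, and `time` is the timestamp.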
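A minimal Python sketch of reading one such line, assuming the three-field format shown above; `parse_json_file_line` is a hypothetical helper name, not part of any Docker tooling.

```python
import json


def parse_json_file_line(line):
    """Split one json-file log line into its fields (hypothetical helper)."""
    entry = json.loads(line)
    return {
        "stream": entry["stream"],              # "stdout" or "stderr"
        "time": entry["time"],                  # timestamp string with nanoseconds
        "message": entry["log"].rstrip("\n"),   # original output line, trailing newline removed
    }


# The escaped \\n in this Python literal becomes \n inside the JSON text.
sample = '{"log":"Log line is here\\n","stream":"stdout","time":"2019-01-01T11:11:11.111111111Z"}'
print(parse_json_file_line(sample))
```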

With the default configuration, data for created containers is stored under /var/lib/docker/containers.
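Inside that directory each container gets a subdirectory named after its full ID, holding `<id>-json.log`, as the experiment below shows. The sketch builds that path in Python; `json_log_path` and the container ID used here are hypothetical, and it assumes the default data root. On a real host, `docker inspect --format '{{.LogPath}}' <container>` reports the actual path.

```python
import posixpath

# Default location; differs if the daemon's data-root has been changed.
CONTAINERS_DIR = "/var/lib/docker/containers"


def json_log_path(container_id, containers_dir=CONTAINERS_DIR):
    """Return <containers_dir>/<id>/<id>-json.log for a full container ID."""
    return posixpath.join(containers_dir, container_id, container_id + "-json.log")


# Hypothetical, shortened ID; real IDs are 64 hex characters
# (e.g. from `docker ps --no-trunc -aq`).
print(json_log_path("abc123"))
# /var/lib/docker/containers/abc123/abc123-json.log
```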

Experiment

```shell
root@ubuntu-parallel:~# docker run --name default_logging_driver hello-world
root@ubuntu-parallel:~# cd /var/lib/docker/containers/$(docker ps --no-trunc -aqf "name=default_logging_driver")
root@ubuntu-parallel:~# cat $(docker ps --no-trunc -aqf "name=default_logging_driver")-json.log
{"log":"\n","stream":"stdout","time":"2020-04-02T01:46:54.096347888Z"}
{"log":"Hello from Docker!\n","stream":"stdout","time":"2020-04-02T01:46:54.096377382Z"}
{"log":"This message shows that your installation appears to be working correctly.\n","stream":"stdout","time":"2020-04-02T01:46:54.096381118Z"}
{"log":"\n","stream":"stdout","time":"2020-04-02T01:46:54.096383725Z"}
```
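To connect the file back to `docker logs`: concatenating the `log` fields in order reproduces the text the container printed. The Python sketch below does that for the captured lines above, with an optional stream filter. `render_logs` is a hypothetical name, and the real `docker logs` also routes stdout and stderr entries to the matching output streams, which this sketch does not attempt.

```python
import json


def render_logs(lines, stream=None):
    """Join the "log" fields of json-file lines, optionally for one stream only."""
    out = []
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines
        entry = json.loads(line)  # trailing "\n" from file iteration is valid JSON whitespace
        if stream is None or entry["stream"] == stream:
            out.append(entry["log"])  # each "log" value already ends with its newline
    return "".join(out)


# The four lines captured in the experiment above.
captured = [
    '{"log":"\\n","stream":"stdout","time":"2020-04-02T01:46:54.096347888Z"}',
    '{"log":"Hello from Docker!\\n","stream":"stdout","time":"2020-04-02T01:46:54.096377382Z"}',
    '{"log":"This message shows that your installation appears to be working correctly.\\n","stream":"stdout","time":"2020-04-02T01:46:54.096381118Z"}',
    '{"log":"\\n","stream":"stdout","time":"2020-04-02T01:46:54.096383725Z"}',
]
print(render_logs(captured), end="")
```

On a host, the same function can read the file directly, e.g. `with open(path) as f: print(render_logs(f), end="")`, where `path` is the container's log file.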

json-file driver documentation: https://docs.docker.com/config/containers/logging/json-file/